Why I Added an llms.txt File to My Site (and Why You Probably Should Too)

I recently added a file called llms.txt to my personal website.

If you’ve never heard of it, that’s fine. Most people haven’t. It’s not a standard yet, just a community proposal written up at llmstxt.org. It’s not something Google Search Console is nagging you about. There’s no standards body behind it and no shiny logo.

But it matters.

We’ve spent the last 20 years teaching machines how to crawl websites. Robots.txt told search engines where they could go. Sitemaps told them what mattered. Canonical tags told them which version to trust.

Now we’re in a new phase.

Large language models don’t just crawl. They read, summarize, quote, remix, and answer questions on your behalf. And most websites haven’t given them any guidance on how to do that responsibly.

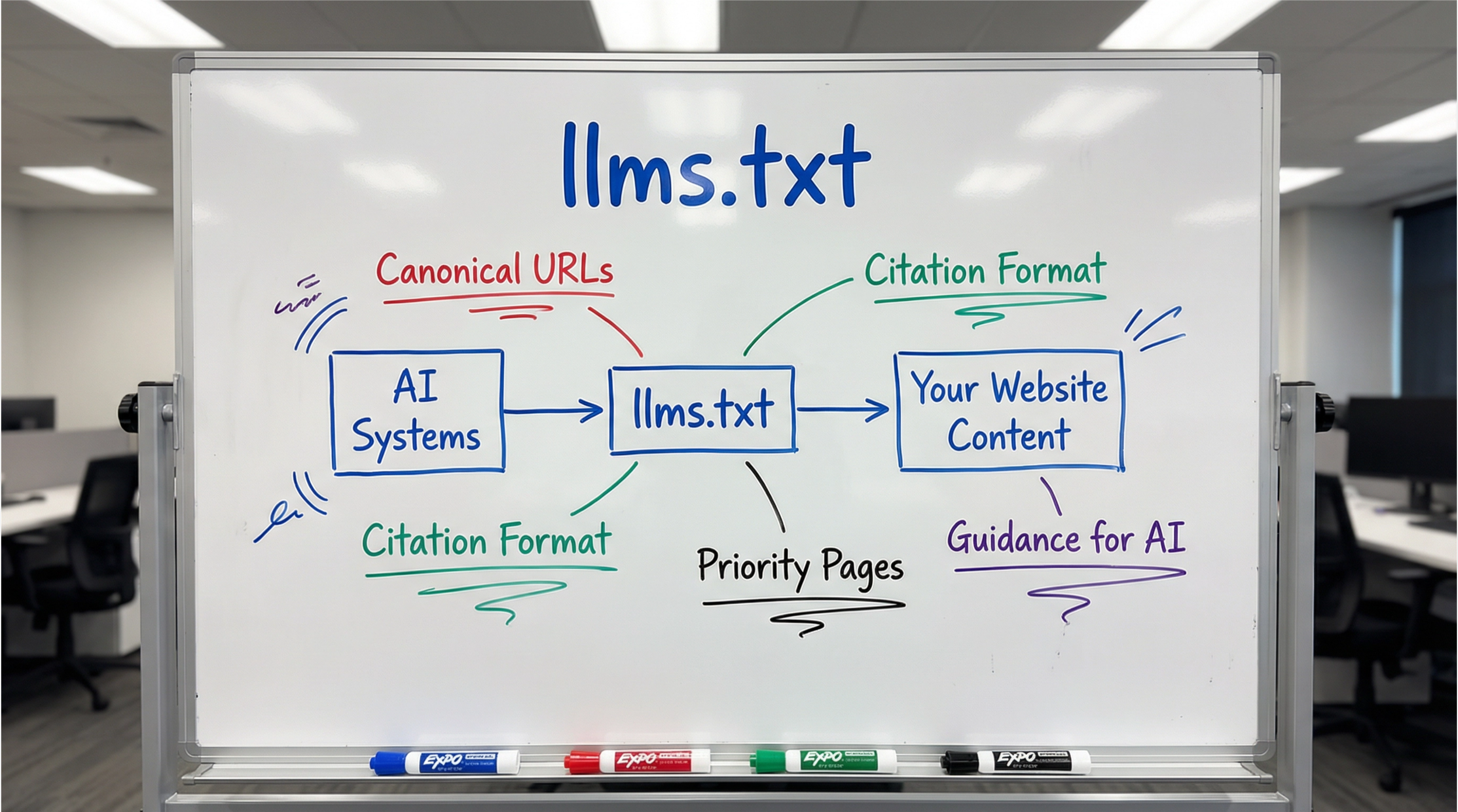

That’s where llms.txt comes in.

At its core, an llms.txt file is a plain-text set of instructions for AI systems. It can tell them:

What pages represent the canonical truth of your site

What content should be prioritized

What content should be ignored or de-prioritized

How you prefer to be cited

What’s okay to summarize versus reproduce

Think of it as robots.txt for comprehension instead of crawling.
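
Nothing obliges a model to read the file. For tools that do, here’s a minimal sketch of how one might pull the prioritized links out of it. It assumes the Markdown layout described at llmstxt.org (an H1 title, a blockquote summary, then H2 sections of link lists); the parse_llms_txt helper, the section names, and the URLs are all hypothetical.

```python
import re

# Matches Markdown list links such as "- [About](https://example.com/about): notes".
LINK_RE = re.compile(r"^\s*[-*]\s+\[([^\]]+)\]\(([^)\s]+)\)")


def parse_llms_txt(text: str) -> dict:
    """Group (title, url) pairs under the H2 heading they appear beneath."""
    sections = {}
    current = None  # lines before the first H2 (title, summary) are skipped
    for line in text.splitlines():
        if line.startswith("## "):
            current = line[3:].strip()
            sections.setdefault(current, [])
        elif current is not None:
            match = LINK_RE.match(line)
            if match:
                sections[current].append((match.group(1), match.group(2)))
    return sections


# Hypothetical file contents; every name and URL here is made up.
SAMPLE = """# Example Author
> Writing on AI, nonprofit operations, and leadership.

## Priority pages
- [About](https://example.com/about): who I am
- [Blog](https://example.com/blog)

## Optional
- [Media](https://example.com/media)
"""

if __name__ == "__main__":
    for section, links in parse_llms_txt(SAMPLE).items():
        print(section, links)
```

The point of the sketch is that the file is only useful if it stays simple enough for a reader like this to parse without guessing.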

Why I cared enough to implement it

I write a lot about AI, nonprofit operations, digital transformation, and modern leadership. Increasingly, people are discovering my work not through Google links, but through answers generated by AI tools.

When that happens, a few things matter to me:

The right page gets referenced, not a tag archive or a duplicate URL

My words aren’t taken out of context

I’m cited correctly and consistently

Thin or low-value pages don’t become the “source of truth”

Without guidance, models guess. They follow links. They sometimes land on paginated views, tag indexes, or outdated summaries.

llms.txt is my way of reducing that ambiguity.
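
To make “the right page” concrete, here’s a minimal sketch of the kind of rule these guardrails express: query strings, tag and category archives, and numbered pagination are treated as non-canonical. The URL patterns are assumptions (Squarespace-style /tag/ and /category/ paths, an ?offset= parameter for older posts, and the /page/N pagination other platforms use), so check them against your own site. The is_canonical_candidate helper is hypothetical, not something any AI system is known to run.

```python
from urllib.parse import urlsplit

# Assumed archive markers: tag/category listings plus /page/N pagination.
# Adjust these for the URL scheme your own site actually uses.
ARCHIVE_MARKERS = ("/tag/", "/category/", "/page/")


def is_canonical_candidate(url: str) -> bool:
    """Return True if a URL looks like a content page rather than an archive view."""
    parts = urlsplit(url)
    # Any query string (offsets, format switches, tracking params) marks a variant.
    if parts.query:
        return False
    # Tag indexes, category indexes, and numbered pagination are listings, not sources.
    return not any(marker in parts.path for marker in ARCHIVE_MARKERS)


if __name__ == "__main__":
    for url in (
        "https://example.com/blog/why-i-added-llms-txt",
        "https://example.com/blog/tag/ai",
        "https://example.com/blog?offset=1700000000000",
    ):
        print(url, is_canonical_candidate(url))
```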

How this works on a Squarespace site

Squarespace doesn’t let you drop arbitrary files at the root of your domain. Uploaded files live under /s/.

So the actual file lives at:

/s/llms.txt

And I added a permanent (301) redirect:

/llms.txt → /s/llms.txt

That way:

Humans who type the conventional URL, /llms.txt, land on the file

AI systems that check the root path find it there

The redirect is explicit and permanent

It’s the same pattern people have used for years with security.txt, humans.txt, and similar files on Squarespace.

Simple. Clean. Predictable.
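
Since the plan depends on the redirect being permanent, it’s worth checking what the server actually returns. (In Squarespace, redirects like this are entered under URL Mappings as a line such as /llms.txt -> /s/llms.txt 301.) Here’s a minimal standard-library sketch: it sends one HEAD request without following redirects, then checks for a 301 or 308 pointing at /s/llms.txt. The function names are hypothetical, and it assumes the server answers HEAD the same way it answers GET.

```python
import http.client
from typing import Optional, Tuple
from urllib.parse import urlsplit


def fetch_redirect(host: str, path: str = "/llms.txt") -> Tuple[int, Optional[str]]:
    """Send one HEAD request (http.client never follows redirects); return (status, Location)."""
    conn = http.client.HTTPSConnection(host, timeout=10)
    try:
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def is_permanent_redirect_to(status: int, location: Optional[str],
                             target_path: str = "/s/llms.txt") -> bool:
    """True if the response is a permanent redirect (301 or 308) to target_path."""
    if status not in (301, 308) or location is None:
        return False
    # Location may be absolute ("https://host/s/llms.txt") or relative ("/s/llms.txt").
    return urlsplit(location).path == target_path
```

To use it, call fetch_redirect with your own domain and pass the status and Location it returns to is_permanent_redirect_to. Keeping the network call separate from the check makes the check easy to test offline.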

What I put in my llms.txt

The file itself is intentionally boring. That’s a feature.

It includes:

High-level guidance on canonical URLs

A short list of priority pages (home, about, blog, books, media)

Clear instructions to avoid tag pages, pagination, and query strings

A preferred citation format

A sitemap reference for discovery
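
To give a sense of the shape, here’s a hypothetical sketch (not a copy of a real file) of an llms.txt with those five parts, produced by a small Python helper. It loosely follows the llmstxt.org layout of an H1 title, a blockquote summary, and H2 sections; the build_llms_txt helper, section names, example.com URLs, and citation wording are all placeholders. The sitemap path assumes Squarespace’s auto-generated /sitemap.xml.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Page:
    title: str
    url: str
    note: str = ""


def build_llms_txt(site_name: str, summary: str, canonical_note: str,
                   pages: List[Page], avoid: List[str],
                   citation: str, sitemap_url: str) -> str:
    """Render a deliberately boring llms.txt from a few plain inputs."""
    lines = [f"# {site_name}", "", f"> {summary}", "", canonical_note, ""]
    lines.append("## Priority pages")
    for page in pages:
        suffix = f": {page.note}" if page.note else ""
        lines.append(f"- [{page.title}]({page.url}){suffix}")
    lines += ["", "## Avoid"]
    lines += [f"- {item}" for item in avoid]
    lines += ["", "## Citation", citation]
    lines += ["", "## Sitemap", f"- [Sitemap]({sitemap_url})"]
    return "\n".join(lines) + "\n"


# Every value below is a hypothetical placeholder.
EXAMPLE = build_llms_txt(
    site_name="Example Author",
    summary="Writing on AI, nonprofit operations, digital transformation, and leadership.",
    canonical_note="Canonical URLs use https://example.com/ with no query strings.",
    pages=[
        Page("Home", "https://example.com/"),
        Page("About", "https://example.com/about"),
        Page("Blog", "https://example.com/blog"),
        Page("Books", "https://example.com/books"),
        Page("Media", "https://example.com/media"),
    ],
    avoid=[
        "Tag and category archives",
        "Paginated listings",
        "Any URL with a query string",
    ],
    citation="Cite as: Author Name, post title, and the canonical post URL.",
    sitemap_url="https://example.com/sitemap.xml",
)

if __name__ == "__main__":
    print(EXAMPLE, end="")
```

Generating the file from data keeps the priority list in one place; on Squarespace you would still upload the output as a file, which ends up under /s/.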

No hype. No manifestos. Just guardrails.

The goal isn’t to control AI. That ship has sailed.

The goal is to make it easier for AI systems to be accurate.

Why this is part of GEO, not a gimmick

There’s a lot of noise right now around “Generative Engine Optimization.”

Most of it is just SEO with new language.

But there is a real shift happening: discovery is moving from links to answers. From pages to synthesis. From “ten blue links” to “here’s what you need to know.”

Files like llms.txt are early infrastructure for that shift. They’re small, practical, and optional. But they signal intent.

They say:

“I care how my work is understood.”

“I care about attribution.”

“I’m building for humans and machines.”

That’s enough reason for me.